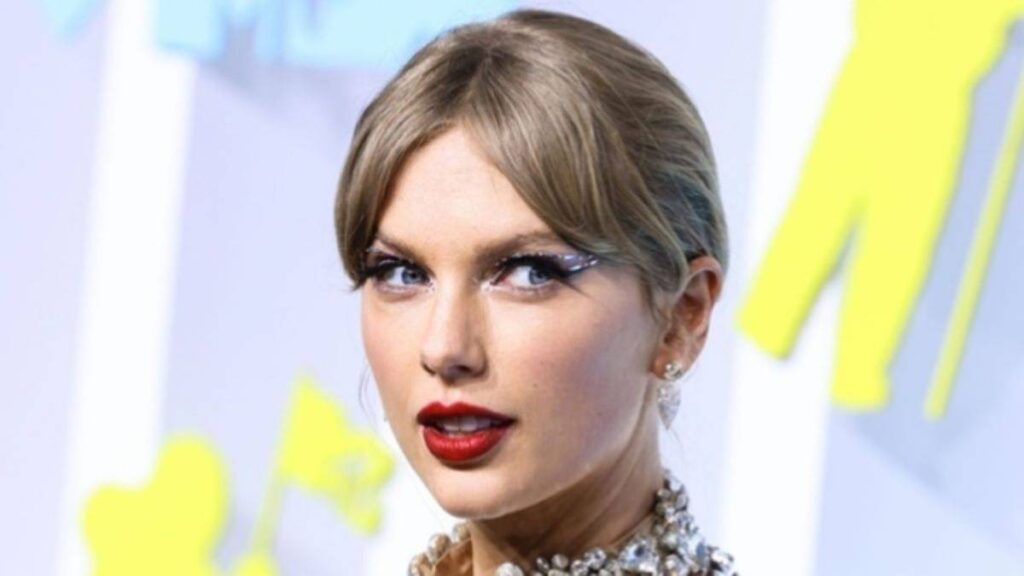

Pornographic images of Taylor Swift have surfaced online, stirring controversy. Except they aren't real. They are deepfakes: images or recordings that have been altered to show someone doing or saying something they never did or said. In Swift's case, they are AI-generated images of the superstar singer.

The images have once again brought concerns about digital privacy protections to the fore. Victims have also accused social media companies of not being vigilant enough in dealing with the issue.

The images, whose creator remains unknown, had circulated widely before host platforms such as X stepped in. X said its team works to remove every non-consensual explicit image or video, and it reiterated that this type of media is strictly prohibited.

“We are committed to maintaining a safe and respectful environment for all users,” read a statement from the company.

However, critics remain unimpressed by how quickly social media companies have dealt with the problem. For example, one deepfake image of Swift amassed more than 47 million views before X removed it. Swift has not yet commented publicly on the matter.

The multiple award-winning singer isn't the only celebrity targeted by deepfake creators. Many other high-profile figures have had deepfakes of themselves circulate widely.

But celebrities are not the only victims. Ordinary people have also been targeted by deepfake creators, who are widely believed to hold misogynistic views.

Carrie Goldberg, a New York City-based lawyer, has handled many cases for deepfake victims. She told reporters that she has fought for many non-celebrities, particularly children who have been victims of tech abuse.

"Our country has made so much progress in banning the nonconsensual dissemination of nude images," she said. "But now, deepfakes are sort of filling in that gap."

Goldberg was referring to the lack of adequate legal protection for deepfake victims. The number of such altered images and videos has grown steadily over the past few years.

Reports show that between January and September 2023, 244,465 deepfake videos were posted online. These videos were hosted on more than 35 websites dedicated to publishing deepfakes.

Only ten states have laws that criminalize the production and distribution of deepfakes, among them Virginia and Texas. There is no such law at the federal level.

Although advocates have criticized the lack of legal attention to this criminal trend, the states that have passed laws to deal with the issue offer some hope. So does New York Congressman Joe Morelle's recent move to introduce legislation tackling the problem.

The Preventing Deepfakes of Intimate Images Act he unveiled would criminalize the non-consensual sharing of deepfake images online. However, little news has emerged about the bill since the House referred it to the Judiciary Committee.

While lawmakers struggle to pass federal and state laws that would nip the problem in the bud, more people are falling victim, most of them women.

Strong legislation would go a long way toward protecting women from online violence. Women's rights activists are patiently waiting for a breakthrough.